I’d like to preface this post by saying that it is valuable and important for our democracy to engage in meaningful critique of Australia’s education systems and processes, including its national curriculum. The keyword here is meaningful.

And, for full disclosure: In 2021, I was briefly seconded to ACARA to support the development of Version 9 of the Australian Curriculum: Science.

“It is… advisable that the teacher should understand, and even be able to criticize, the general principles upon which the whole educational system is formed and administered. He [sic] is not like a private soldier in an army, expected merely to obey, or like a cog in a wheel, expected merely to respond to and transmit external energy; he [sic] must be an intelligent medium of action” (Dewey, 1895; emphasis mine).

In a report released on Monday 27 November 2023, the educational reform consultancy Learning First claims to have conducted the first detailed benchmarking of the content of the Australian science curriculum against seven high-performing and comparable systems around the world.

The claim that this is the first benchmarking activity is just one of many errors in the report, which is rife with unsubstantiated claims, incomplete evidence, and gross assumptions. The most disturbing of these is that more "content" is a key indicator of a quality curriculum, with the implication that good teaching is all about the transmission of content.

Learning First is a company that claims to “build relationships… based on honesty, integrity and deep experience and expertise” and whose clients “include federal governments in various parts of the world.” Given their activities and clients, this report is worth scrutiny.

In this blog post, I will outline some of the issues with the report.

The 2018 PISA results do not reflect students’ learning of the Australian Curriculum: Science

This report uses the results of PISA (2018) to justify claims that the Australian Curriculum: Science is not of adequate quality, and argues that the curricula of Alberta, Quebec, England, Japan, Singapore, Hong Kong and the US are. Using these data to make such a claim is not appropriate. Correlation is not causation, particularly when the curricula supposedly being correlated with the results were not yet in place when the data were collected!

First, the curricula included in the benchmarking are not reflected in the data. Let's start with the Australian Curriculum. The claim made by Learning First in Figure 2 of the report, that "2018 is the first PISA test when Australian students had been taught the Australian Curriculum for most of their schooling," is erroneous. States and territories began implementing the Australian Curriculum in 2011 (starting with the ACT), and all states and territories were implementing the Australian Curriculum – or some version of it – from 2015.

So, at best, some of the 15-year-old students assessed by PISA in 2018 had been engaging with the Australian Curriculum since 2013 – perhaps from Year 5 or 6 – but certainly not for most of their schooling. The Australian Curriculum: Science was itself only implemented by most Australian states and territories from 2013.

Additionally, the version of the Australian Curriculum: Science that is critiqued in detail in the Learning First report was released in 2022, four years after the 2018 PISA.

Therefore, the results of the 2018 PISA do not significantly reflect students’ learning of science as described in the Australian Curriculum: Science.

Nor do the 2018 PISA results of the other systems reflect their curricula. The NGSS (US) was released in 2013 but has not been adopted by all states, with only 36% of US students learning in systems that reference the standards. The Hong Kong Curriculum was released in 2017, just before the data were collected. Worse, the Singapore science syllabus for Year 7 to Year 8 was released in 2020, while the Alberta Curriculum: Science is a 2022–2023 pilot. Both appeared well after the PISA data used to justify the report's overarching claims were collected.

The results of the 2018 assessment do not significantly reflect students’ learning of science in any of the curricula compared. They cannot be used to argue that the Australian Curriculum: Science is of poor quality in comparison to other countries.

PISA assesses scientific literacy, not science content knowledge

PISA is an assessment of 15-year-old students' scientific literacy, not their content knowledge. PISA "asks how well [15-year-olds] are able to apply understandings and skills in science, reading and mathematics to everyday situations" (ACER, 2016; emphasis mine). Other than Singapore, Australia actually performed comparably with the other countries listed (mean scores in brackets): Japan (529), Canada (518), UK (505), Australia (503), and US (502).

As the report only benchmarks "science content", perhaps a better indicator of Australia's performance would be the TIMSS assessment, which "looks at how well Year 4 and Year 8 students have mastered the factual and procedural knowledge taught in school mathematics and science curricula" (ACER, 2016; emphasis mine). So why doesn't the Learning First report reference TIMSS at all? Perhaps it is because Australia's performance in TIMSS was similar to or better than that of the US, England and Hong Kong. Of the comparison countries, only Singapore performed statistically significantly better than Australia in TIMSS. Including these data would not fit the narrative built by the Learning First report.

Flawed methodology and analysis of “content”

Technically, this isn't a benchmarking exercise but a mapping exercise. The mapping methodology is extremely flawed, and the report provides insufficient data to substantiate its claims. The methodology appears to be a simple count of "items of content." Its scope is limited to content, and it does not address the rationale or the achievement standards. Even within content, only one of the three strands of the Australian Curriculum: Science, Science Understanding, is examined in any detail.

“Topic depth is based on a quartile analysis of the number of individual items of content in each topic. A topic was classified as being ‘in depth’ for a system if the number of content items within that topic was in the upper quartile” (Learning First, p20).

In this view, all content is considered of equal importance and contributes to “depth.”
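To see what this rule rewards, consider a minimal Python sketch of the classification quoted above. It is an illustration under stated assumptions, not Learning First's actual procedure. The report does not specify how the quartile boundary was computed, so the sketch assumes that "upper quartile" means an item count at or above the third quartile (Q3), as calculated by Python's `statistics.quantiles`. The function name `in_depth_topics`, the topic labels A to H and their item counts are all hypothetical.

```python
from __future__ import annotations

from statistics import quantiles


def in_depth_topics(item_counts: dict[str, int]) -> set[str]:
    """Return the topics classified as 'in depth' by a simple count rule.

    Assumption (hypothetical, not the report's documented method): a topic
    is 'in depth' if its number of content items is at or above the third
    quartile (Q3) of all topic counts, using Python's default 'exclusive'
    quantile method. Every item counts as exactly 1.
    """
    q3 = quantiles(list(item_counts.values()), n=4)[2]  # cut points are [Q1, Q2, Q3]
    return {topic for topic, count in item_counts.items() if count >= q3}


# Hypothetical item counts for one system's topics (not data from the report).
counts = {"A": 2, "B": 3, "C": 4, "D": 5, "E": 6, "F": 8, "G": 9, "H": 12}
print(sorted(in_depth_topics(counts)))  # ['G', 'H']

# The same curriculum, but topic C's content is rewritten as ten items instead of four.
split_counts = dict(counts, C=10)
print(sorted(in_depth_topics(split_counts)))  # ['C', 'H']
```

Every item adds exactly one to a topic's count, whatever its cognitive demand or its role in building a core concept. Because the threshold is relative, rewriting hypothetical topic C as ten separate items pushes it into the upper quartile. It also pushes topic G out, although nothing about G has changed.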

Content in the Australian Curriculum is conceptual, not declarative. The authors of this report engage with the content descriptions only superficially, reading the curriculum as someone who did not understand science or how to teach it would. The cognitive demand associated with the content is not considered at all; nor is the role of the content in developing student understanding of core concepts. Whether we consider how students learn or the role of content in developing key ideas and core concepts, not all content is created equal.

For example, the report claims that evolution as a topic is not addressed until Year 10. This is not true. Evolution is a complex, nuanced, and challenging topic to learn. Understanding of evolution, and particularly the process of natural selection, is built upon understandings of the concepts of survival, interactions between living things and their habitats, ecosystems and ecology, adaptations, genetic variation, and patterns of inheritance; these ideas are sequenced and developed throughout the preceding years, starting at Foundation.

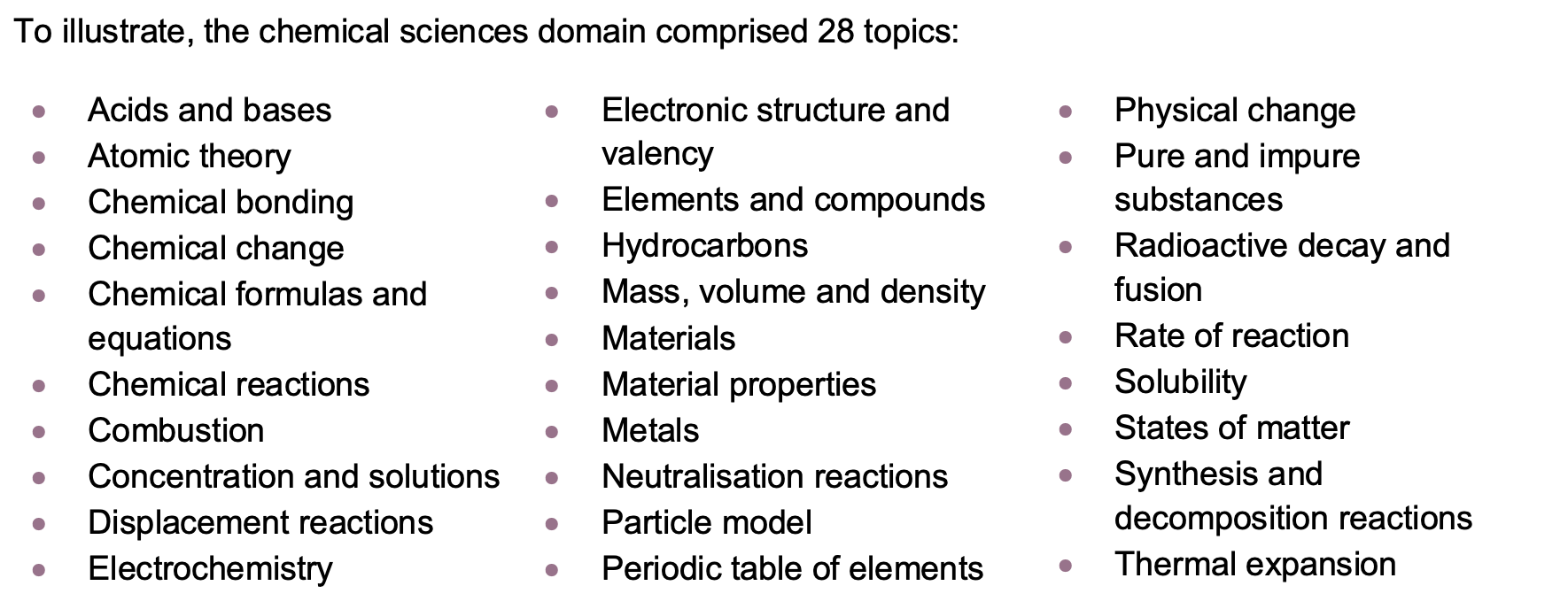

The database of topics is not provided as part of the report. However, a sample for the chemical sciences is provided in the appendix to the report, on p40. Here it is:

Source: Learning First

The identification of these topics was supposedly confirmed by curriculum experts, data analysts and science teachers. But the list raises more questions than it answers. Why are materials covered separately from material properties? Why are metals singled out? These oddities result from the bottom-up methodology employed, which began with "content items" (discrete pieces of science knowledge) and grouped them into topics.

In the Australian Curriculum, Science is not taught in isolation

The report shows no evidence that its authors understand how the Australian Curriculum: Science is structured. They view the content descriptions within Science Understanding in total isolation from the curriculum aims and rationale, the other strands (Science as a Human Endeavour and Science Inquiry), and the achievement standard. In fact, in the analysis of the Science Inquiry strand, Table 1 conflates curriculum organisers (the sub-strands) with "scientific skills".

Further, some of the content the authors complain is missing from the Australian Curriculum: Science is addressed in other learning areas or cross-curriculum priorities. For example, reproduction and the spheres of the Earth are topics that Learning First claim are missing from the curriculum. They are included in the Health and Physical Education curriculum and the Humanities and Social Sciences curriculum, respectively. Why duplicate content in a curriculum that is already considered "overcrowded"? (The third dimension of the Australian Curriculum is the general capabilities. These include literacy, numeracy, critical and creative thinking, ethical understanding, and personal and social capability, all of which are relevant to learning science. They are clearly not considered important in the Learning First report, which emphasises content over any other outcome that may be achieved through the teaching and learning of science.)

Teachers don’t teach curriculum, they teach their students science

This approach to curriculum is problematic because it assumes that a teacher's role is to transmit content, and that the role of the curriculum is to tell teachers which content to transmit. The curriculum outlines students' entitlement to learning; that is, students are expected to learn everything described in it. It is almost as though the authors of the Learning First report believe that, without a comprehensive list of declarative statements, teachers will not know what or how to teach science. But a teacher is not an automaton, or an AI bot. They are "an intelligent medium of action" (Dewey, 1895). A teacher reads the curriculum and interprets it in the context of:

- their students' prior knowledge and conceptions;
- their understanding of how new ideas are learned;
- their teaching philosophy and pedagogical knowledge of teaching, learning, and development;
- their understanding of the discipline itself.

This domain of professional expertise, developed through both formal study and experience, is called pedagogical content knowledge, or PCK (Shulman, 1986; Grossman, 1990).

In many cases, the content descriptions that Learning First complain are insufficiently detailed cannot be taught effectively without the very details the report seeks to list. For example, Learning First assert that the teaching of cells is insufficient because the curriculum does not list cell organelles and their functions as required content. Yet a secondary science teacher knows that one cannot teach students about the structure and function of cells without teaching them about cell organelles and their functions. And this teacher will design new learning experiences, or adapt existing ones, to meet their students' learning needs in this area.

A teacher seeking additional guidance can explore the Australian Curriculum: Science content elaborations, which provide details of "ways to teach the content descriptions" that might enhance students' learning (ACARA). These elaborations include much of the content that the Learning First report complains is missing. The content descriptions specify the "essential knowledge, understanding, and skills that students are expected to learn." The content elaborations, by contrast, are not mandated, and nor are they a set of "complete or comprehensive content points" (ACARA). It's appropriate that these content elaborations be optional, so that teachers can design effective learning experiences for their students in their own teaching and learning context.

If this report is so poorly researched, why is it necessary to critique it at all?

The goal of an Australian Curriculum was to pool Australia's intellectual and economic resources to develop an internationally competitive national curriculum, sampling assessments, and resources. Instead, politicians react to the news of "poor" international test results with curriculum reviews and revisions. Critique of reports such as this is necessary to prevent reactionary responses.

The report's authors claim that "if the science curriculum is lacking in content, quality, sequencing and depth, that could be true for other subjects…" While this is a long bow to draw, it signals an intention to "benchmark" other learning areas of the Australian Curriculum as well. If so, the approach to assessing the "quality" of the Australian Curriculum in future reports must be significantly revised to have any credibility or usefulness.

The amount of prescribed content is not a metric for curriculum quality. There is no definable body of content that is the "best" content for curricula to include. It is not important that all students are taught exactly the same content, down to the minutiae of the wording used to describe the function of the endoplasmic reticulum. We cannot spend every minute of every day teaching content. And good teaching is more than teaching content! Yet the report's contrary assumptions go largely unarticulated, and certainly unquestioned.

What makes a quality curriculum?

A quality curriculum is not a textbook. It is not a syllabus. It is not a series of checklists that can be addressed through endless worksheets. Curriculum is not a replacement for quality teaching and learning resources, and certainly not for teacher expertise.

A quality curriculum considers the learning entitlement of all students: those who will pursue STEM pathways as well as those who won’t. A quality curriculum recognises that diverse students and classrooms require a range of pedagogic approaches and makes space for that diversity. A quality curriculum provides opportunities for teachers to design learning experiences that are relevant, sufficient, and appropriate for their students.

A quality science curriculum considers how it supports the development of students' scientific literacy and a positive science identity (Fensham, 2022). If we are serious about addressing the decline in the number of students pursuing STEM, we need a curriculum that enables rich engagement and reflexive, effective pedagogy.

A focus on core concepts and key ideas is pivotal to this approach. The Australian Curriculum: Science is underpinned by ten core concepts (similar to, but distinct from, The Big Ideas of Science). There are also understandings about science and how it works that students learn in Science; these are learned not through content but with content as the context for collaborative investigation and argument.

This report does a disservice to teachers by undermining their professional expertise and experience. It does a disservice to education by undermining the value of curriculum and misrepresenting its quality. But it also does a disservice to you, the reader, by assuming that you'll accept its claims at face value without noticing how spurious its evidence and reasoning are. Meaningful critique of the Australian Curriculum: Science would be welcome; this is not it.

Further reading

The Australian Curriculum is copping fresh criticism – what is it supposed to do? (The Conversation)

Are Australian students really falling behind? It depends which test you look at (The Conversation)

References

Fensham, P. J. (2022). The future curriculum for school science: What can be learnt from the past? Research in Science Education, 52(Suppl 1), 81–102.

Grossman, P. L. (1990). The making of a teacher: Teacher knowledge and teacher education. Teachers College Press.

Jensen, B., Ross, M., Collett, M., Murnane, N., & Pearson, E. (2023). Fixing the hole in Australian education: The Australian Curriculum benchmarked against the best. Learning First.

McLellan, J. A., & Dewey, J. (1895). What psychology can do for the teacher. In J. A. McLellan & J. Dewey, The psychology of number and its applications to methods of teaching arithmetic (pp. 1–22). D. Appleton & Company.

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14.